Blend by Cursor

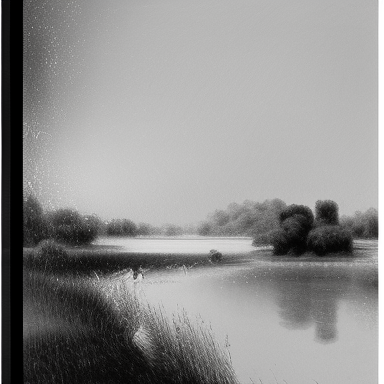

I collaborated with Cursor to create an image blending tool built on open-source neural network weights. The result is a Python project that uses two approaches to merge multiple images into a single output. Working with Cursor was an interesting experience. The tool helped accelerate the implementation of both blending methods, from setting up the neural network pipelines to optimizing for different hardware backends (including Apple Silicon MPS support).

Method 1: Latent Space Blending

This approach works directly in the latent space of Stable Diffusion’s Variational Autoencoder (VAE). It encodes each image into a compressed latent representation, combines the latents element-wise by averaging them (or taking the maximum), and decodes the result back to pixel space.
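A minimal sketch of this idea, not the project's actual code: `blend_latents` does the element-wise combination, and `blend_images` wraps it with a VAE from the `diffusers` library. The function names, the `stabilityai/sd-vae-ft-mse` checkpoint, the fixed 512×512 resize, and the use of the posterior mean as the encoding are all assumptions made for illustration.

```python
import numpy as np


def blend_latents(latents, mode="mean"):
    """Combine same-shaped latent arrays element-wise.

    mode="mean" averages the latents; mode="max" keeps the largest
    value at each position.
    """
    stacked = np.stack(latents, axis=0)
    if mode == "mean":
        return stacked.mean(axis=0)
    if mode == "max":
        return stacked.max(axis=0)
    raise ValueError(f"unknown mode: {mode!r}")


def blend_images(images, mode="mean",
                 vae_id="stabilityai/sd-vae-ft-mse",  # assumed checkpoint
                 device="cpu"):                        # e.g. "mps" on Apple Silicon
    """Encode PIL images with a Stable Diffusion VAE, blend, and decode."""
    # Heavy dependencies are imported lazily so blend_latents works alone.
    import torch
    from diffusers import AutoencoderKL
    from PIL import Image

    vae = AutoencoderKL.from_pretrained(vae_id).to(device).eval()

    latents = []
    with torch.no_grad():
        for img in images:
            # Bring every image to a common size and scale pixels to [-1, 1].
            arr = np.asarray(img.convert("RGB").resize((512, 512)), dtype=np.float32)
            x = torch.from_numpy(arr / 127.5 - 1.0).permute(2, 0, 1).unsqueeze(0)
            # Use the posterior mean so the encoding is deterministic.
            z = vae.encode(x.to(device)).latent_dist.mean
            latents.append(z.cpu().numpy())

        blended = torch.from_numpy(blend_latents(latents, mode)).to(device)
        out = vae.decode(blended).sample  # shape (1, 3, H, W), roughly in [-1, 1]

    # Map back to 8-bit RGB and return a PIL image.
    out = ((out.clamp(-1, 1) + 1.0) * 127.5).round().byte()
    return Image.fromarray(out[0].permute(1, 2, 0).cpu().numpy())
```

Calling `blend_images([img_a, img_b], mode="max")` would switch from averaging to the element-wise maximum. Keeping the blending math separate from the model code makes it easy to try other combination rules without touching the VAE.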

Method 2: Semantic Text Blending

This approach has three steps.

- Vision-Language Model: BLIP generates text descriptions of each input image

- LLM Text Generation: GPT-2 synthesizes these descriptions into a coherent artistic prompt

- Text-to-Image Generation: Stable Diffusion creates the final blended image from this creative prompt
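The three steps above can be sketched as a small pipeline. This is an illustration under stated assumptions rather than the project's implementation: the function names, the seed sentence wording, the generation settings, and the model identifiers (`Salesforce/blip-image-captioning-base`, `gpt2`, `stable-diffusion-v1-5/stable-diffusion-v1-5`) are all hypothetical choices.

```python
def build_seed_text(captions):
    """Join per-image captions into a seed sentence for the language model."""
    cleaned = [c.strip().rstrip(".") for c in captions if c.strip()]
    if not cleaned:
        raise ValueError("need at least one non-empty caption")
    return "An artistic image combining " + " and ".join(cleaned) + ","


def semantic_blend(images, caption, expand, generate):
    """Run caption -> prompt -> image; each stage is a plain callable."""
    captions = [caption(img) for img in images]   # step 1: describe each image
    prompt = expand(build_seed_text(captions))    # step 2: write one prompt
    return generate(prompt), prompt               # step 3: render the prompt


def load_stages(device="cpu", sd_id="stable-diffusion-v1-5/stable-diffusion-v1-5"):
    """Build the three stages from BLIP, GPT-2, and Stable Diffusion.

    Model identifiers and generation settings are assumptions.
    """
    import torch
    from diffusers import StableDiffusionPipeline
    from transformers import (BlipForConditionalGeneration, BlipProcessor,
                              pipeline)

    blip_id = "Salesforce/blip-image-captioning-base"
    processor = BlipProcessor.from_pretrained(blip_id)
    blip = BlipForConditionalGeneration.from_pretrained(blip_id).to(device)
    gpt2 = pipeline("text-generation", model="gpt2")
    sd = StableDiffusionPipeline.from_pretrained(sd_id).to(device)

    def caption(img):
        inputs = processor(images=img.convert("RGB"), return_tensors="pt").to(device)
        with torch.no_grad():
            ids = blip.generate(**inputs, max_new_tokens=30)
        return processor.decode(ids[0], skip_special_tokens=True)

    def expand(seed):
        # The pipeline returns the seed followed by GPT-2's continuation.
        return gpt2(seed, max_new_tokens=40, do_sample=True)[0]["generated_text"]

    def generate(prompt):
        return sd(prompt).images[0]

    return caption, expand, generate
```

With real models, usage would look like `image, prompt = semantic_blend(images, *load_stages(device="mps"))`. Passing the stages in as callables keeps the orchestration logic testable with simple stand-ins and makes it straightforward to swap any one model.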

The results are not as impressive as those from Blend, which used Midjourney. The trade-off is that the models used here are small enough to run on a MacBook Air.

Check out the full implementation and try it yourself here.